Making a simple web scraper with an interface using RapidAPI and Next.js

Wanna scrape? You’ve come to the right place

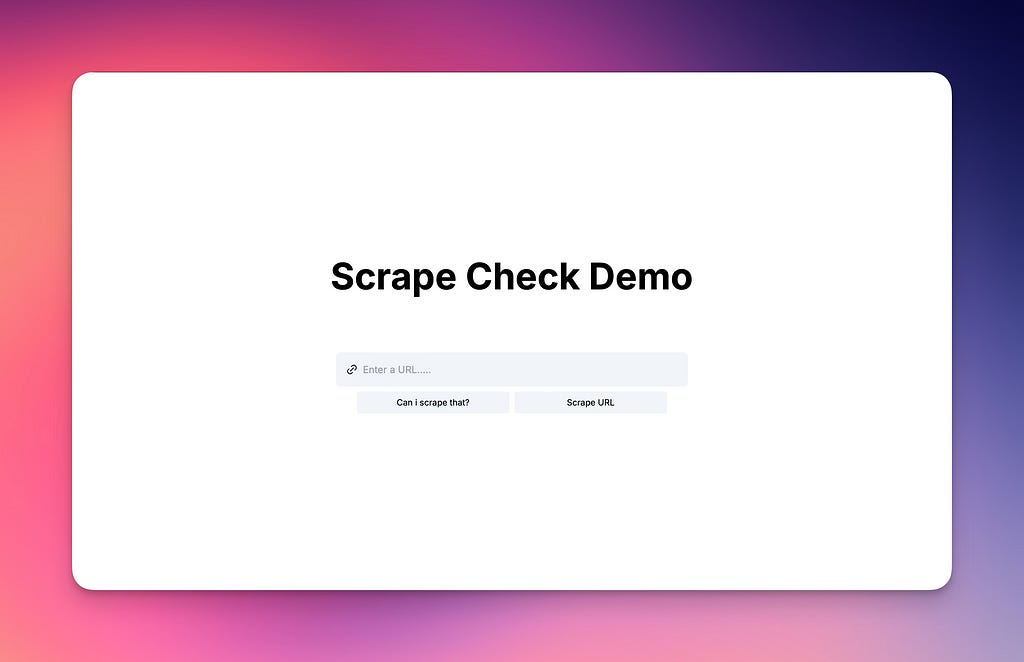

The demo is publicly available at scraper-demo.andriotis.me, and the code is at github.com/andriotisnikos1/scraper-demo

So. You want to scrape some websites but don’t know where to start? I’ve got you. I believe you’ve read the title, so I won’t repeat myself.

What you’re gonna need:

- A free subscription to the scraper API on RapidAPI, and your API key
- Node.js and npm (who doesn’t have those, I know)
- 20 minutes of your time

Let’s get to it!

Preparation: Getting the RapidAPI API Key

1. Head over to https://rapidapi.com/developer/dashboard
2. In the sidebar, under “My Apps”, select one of your apps and go to “Authorisation”. If you don’t have an app yet, create one.
3. Copy your API key. If you don’t have one, create one with the “RapidAPI” scope.
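The key you just copied travels in two request headers, which the server actions in Part 2 build by hand in each function. As a minimal sketch, assuming the key is read from `process.env.RAPIDAPI_KEY`, a small helper could build those headers once and fail with a readable error when the key is missing. `rapidApiHeaders` is a hypothetical name, not part of RapidAPI or axios.

```ts
// Sketch only: rapidApiHeaders is a hypothetical helper, not part of RapidAPI or axios.
// Assumes the key is read from process.env.RAPIDAPI_KEY, as set up in Part 1.

// Host taken from the actions in this post.
const RAPIDAPI_HOST = "crawl-and-scrape-check.p.rapidapi.com";

// Builds the two headers every RapidAPI request needs. Throws a readable
// error instead of silently sending an undefined key.
function rapidApiHeaders(
  key: string | undefined,
  host: string = RAPIDAPI_HOST,
): Record<string, string> {
  const trimmed = key?.trim(); // stray whitespace from copy-pasting is removed
  if (!trimmed) {
    throw new Error("RAPIDAPI_KEY is not set; add it to your .env file");
  }
  return {
    "X-RapidAPI-Key": trimmed,
    "X-RapidAPI-Host": host,
  };
}
```

If you put it in `app/actions.ts`, keep it un-exported: a `"use server"` file may only export async functions. Each action would then pass `{ headers: rapidApiHeaders(process.env.RAPIDAPI_KEY) }` to `axios.get`, and a missing key would land in the existing `catch` block.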

Part 1: Initialising the project

Navigate to your workspace folder. Mine is “~/workspace”

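Initialise a project with:

```shell
npx create-next-app --ts scraper
cd scraper
```

or, if you’re feeling cheeky:

```shell
yes | npx create-next-app --ts scraper
cd scraper
```

This answers every prompt with “y”. Either way, make sure Tailwind CSS and the App Router (the `app/` directory) end up enabled, because the code below relies on both. If you opted into a `src/` directory, the `app/` paths below become `src/app/`.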

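Now, add your API key to `.env`:

```shell
echo "RAPIDAPI_KEY=<your key here>" > .env
```

Note that `>` overwrites an existing `.env`. Next.js also reads `.env.local`, which create-next-app’s default `.gitignore` keeps out of Git, so that is the safer home for the key if you plan to push the project.

Dependencies

```shell
npm i axios sonner @radix-ui/react-icons
```

Part 2: Server Actions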

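The API currently supports two endpoints, `/check` and `/scrape`. Here’s some info about them:

- `/check` checks whether a URL may be scraped, according to the target site’s policies.
- `/scrape` scrapes a URL and processes the result according to the rules you pass (an element to select and a list of unwanted elements to strip).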
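Both endpoints take their input as query parameters. The sketch below mirrors the parameters the actions send (`url` and `agent` for `/check`; `url`, `selector` and `unwantedFields` for `/scrape`) so you can see the request URLs on their own. `buildCheckUrl` and `buildScrapeUrl` are hypothetical helpers, and the defaults are copied from this post rather than from the API’s documentation.

```ts
// Sketch only: buildCheckUrl/buildScrapeUrl are hypothetical helpers whose
// defaults mirror the values used in this post's actions.ts.
const API_BASE = "https://crawl-and-scrape-check.p.rapidapi.com";

// /check: the page to check and the user agent whose rules should apply.
function buildCheckUrl(target: string, agent = "ScrapeBot"): string {
  const u = new URL("/check", API_BASE);
  u.searchParams.append("url", target);
  u.searchParams.append("agent", agent);
  return u.toString();
}

// /scrape: the page, the element to keep and the elements to strip out.
function buildScrapeUrl(
  target: string,
  selector = "body",
  unwanted: string[] = ["link", "script", "head"],
): string {
  const u = new URL("/scrape", API_BASE);
  u.searchParams.append("url", target);
  u.searchParams.append("selector", selector);
  u.searchParams.append("unwantedFields", unwanted.join(",")); // comma-separated list
  return u.toString();
}
```

`searchParams` percent-encodes each value, so the target URL’s own `?`, `&` and `/` cannot leak into the API’s query string. That is why building the URL this way is safer than gluing strings together.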

1. Make the `actions.ts` file:

```shell
touch app/actions.ts
```

2. Add these functions:

```ts
"use server" // marks every export in this file as a Server Action that runs only on the server

import axios from "axios";

// checks if the input URL can be scraped
export async function scrapeCheck(url: string) {
  try {
    const options = {
      headers: {
        'X-RapidAPI-Key': process.env.RAPIDAPI_KEY,
        'X-RapidAPI-Host': 'crawl-and-scrape-check.p.rapidapi.com'
      }
    };

    // build the request URL
    const builtURL = new URL("https://crawl-and-scrape-check.p.rapidapi.com/check")
    builtURL.searchParams.append('url', url)
    builtURL.searchParams.append('agent', 'ScrapeBot') // you can change the value of this

    const res = await axios.get<{ allowed: boolean }>(builtURL.toString(), { headers: options.headers });
    return res.data.allowed
  } catch (error) {
    console.error(error)
    return false // any error (network, bad key, etc.) is reported as "not allowed"
  }
}

// sends scrape request
export async function scrapeURL(url: string) {
  try {
    const options = {
      headers: {
        'X-RapidAPI-Key': process.env.RAPIDAPI_KEY,
        'X-RapidAPI-Host': 'crawl-and-scrape-check.p.rapidapi.com'
      }
    };

    const builtURL = new URL("https://crawl-and-scrape-check.p.rapidapi.com/scrape")
    builtURL.searchParams.append('url', url)
    // you can change this to an element name
    builtURL.searchParams.append('selector', 'body')
    // you can change this to comma-separated element names
    builtURL.searchParams.append('unwantedFields', 'link,script,head')

    const res = await axios.get<{ content: string }>(builtURL.toString(), { headers: options.headers });
    return res.data.content
  } catch (error) {
    console.error(error)
    return null // the page treats null as a failed scrape
  }
}
```

Part 3: layout.tsx

We’re now getting to the HTML part of this demo. Add these Tailwind classes to the `<body>` tag in `app/layout.tsx`:

```
min-h-full flex flex-col w-full items-center justify-center space-y-20
```

This should be the JSX in `layout.tsx`:

```tsx
<html lang="en" className="h-screen">
  {/* change this line: the layout classes are appended to the font class */}
  <body className={inter.className + " min-h-full flex flex-col w-full items-center justify-center space-y-20"}>{children}</body>
</html>
```

Part 4: Form Actions

In this part, we add the form actions that run when you press the check and scrape buttons.
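The form actions in this part only check that the `url` field isn’t empty before calling the API. If you’d like to reject obviously bad input before spending a request on it, a small validator like this could sit next to them. It is a sketch: `readTargetUrl` is a hypothetical helper, and assuming `https://` when no scheme is typed is an assumption, not something the API requires.

```ts
// Sketch only: readTargetUrl is a hypothetical helper. It takes whatever
// f.get("url") returned (a string, a File or null) and returns a normalised
// http(s) URL string, or null when the value isn't usable.
function readTargetUrl(value: unknown): string | null {
  if (typeof value !== "string") return null; // missing field or a File upload
  const trimmed = value.trim();
  if (trimmed === "") return null;
  // Assumption: "example.com" means "https://example.com".
  const candidate = /^[a-z][a-z0-9+.-]*:\/\//i.test(trimmed) ? trimmed : `https://${trimmed}`;
  try {
    const parsed = new URL(candidate); // throws a TypeError on unparsable input
    // Only web pages make sense for a scraper.
    if (parsed.protocol !== "http:" && parsed.protocol !== "https:") return null;
    return parsed.toString();
  } catch {
    return null;
  }
}
```

`checkURL` and `scrape` could then call `readTargetUrl(f.get("url"))` and show the “Please enter a URL” toast whenever it returns `null`.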

In your `page.tsx` file, remove all the imports and add this:

```tsx
"use client"

import { Link2Icon } from "@radix-ui/react-icons";
import React, { useState } from "react";
import { toast, Toaster } from "sonner";
import { scrapeCheck, scrapeURL } from "./actions";

// check url scraping
async function checkURL(f: FormData) {
  // get url
  const inputUrl = f.get("url");
  if (!inputUrl) return toast.error("Please enter a URL", { duration: 5000 });

  // scrape check: keep the loading toast's id so the result replaces it
  const id = toast.loading("Checking if scraping is allowed...", { duration: 5000 });
  const res = await scrapeCheck(inputUrl as string);

  // result
  if (res) toast.success("Scraping is allowed", { id, duration: 5000 });
  else toast.error("Scraping is not allowed", { id, duration: 5000 });
}

// scrape URL
async function scrape(f: FormData) {
  // get url
  const inputUrl = f.get("url");
  if (!inputUrl) return toast.error("Please enter a URL", { duration: 5000 });

  // scrape
  const id = toast.loading(`Scraping ${inputUrl} ...`, { duration: 5000 });
  const res = await scrapeURL(inputUrl as string);
  if (!res) return toast.error("Failed to scrape the URL", { id, duration: 5000 });
  toast.dismiss(id); // the download itself signals success

  // download: wrap the content in a Blob and click a temporary link to it
  const blob = new Blob([res], { type: "text/plain" });
  const url = URL.createObjectURL(blob);
  const a = document.createElement("a");
  a.href = url;
  a.download = `scraped-${inputUrl}.html`;
  document.body.appendChild(a);
  a.click();
  a.remove(); // clean up the temporary link
  URL.revokeObjectURL(url);
}
```

Let’s talk a bit about these actions:

- `checkURL` takes the URL from the form, asks the `scrapeCheck` server action whether it may be scraped, and shows the result as a toast.
- `scrape` is a bit more interesting. It asks the `scrapeURL` server action to scrape the URL and checks whether that succeeded. If it did, it wraps the returned content in a file and starts a download!
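One rough edge in `scrape`: the download name is built from the raw URL (e.g. `scraped-https://example.com/page.html`), and how the slashes and colons get cleaned up is left to the browser. Here is a sketch of a helper that builds a tidier name instead, assuming you only want letters, digits, dots and dashes in it; `downloadNameFor` is hypothetical.

```ts
// Sketch only: downloadNameFor is a hypothetical helper. It assumes you want
// file names made of letters, digits, dots and dashes only.
function downloadNameFor(target: string, ext = "html"): string {
  let base: string;
  try {
    const u = new URL(target);
    // drop the scheme; keep host, path and query so different pages get different names
    base = u.host + u.pathname + u.search;
  } catch {
    base = target; // not a parsable URL: sanitise the raw text instead
  }
  const slug = base
    .replace(/[^a-z0-9.]+/gi, "-") // each run of other characters becomes one dash
    .slice(0, 100) // keep names reasonably short
    .replace(/^-+|-+$/g, ""); // no leading or trailing dashes
  return `scraped-${slug || "page"}.${ext}`;
}
```

In `scrape`, you would then set `a.download = downloadNameFor(inputUrl as string)`; for `https://example.com/blog/post?id=1` that gives `scraped-example.com-blog-post-id-1.html`.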

Part 5: JSX

Finally, replace the JSX part of your page.tsx with this simple form:

```tsx
export default function Home() {
  const [url, setUrl] = useState("");

  return (
    <React.Fragment>
      {/* Title */}
      <h1 className="md:text-[60px] text-[30px] font-bold">Scrape Check Demo</h1>

      <div className="flex flex-col w-full items-center justify-center gap-2">
        {/* Main Input */}
        <div className="flex w-11/12 md:w-2/5 items-center space-x-2 p-4 bg-slate-100 rounded-lg">
          <Link2Icon className="h-5 w-5" />
          <input type="text" placeholder="Enter a URL....." className="outline-none bg-transparent w-4/5" onChange={(e) => setUrl(e.target.value)} />
        </div>

        <div className="flex items-center gap-2 px-2 flex-col md:flex-row ">
          {/* Check Button */}
          <form action={checkURL}>
            <input type="text" name="url" hidden readOnly value={url} required />
            <button type="submit" className="w-[250px] p-2 text-sm bg-slate-100 rounded-md ">Can i scrape that?</button>
          </form>

          {/* Scrape Button */}
          <form action={scrape}>
            <input type="text" name="url" hidden readOnly value={url} required />
            <button type="submit" className="w-[250px] p-2 text-sm bg-slate-100 rounded-md ">Scrape URL</button>
          </form>
        </div>
      </div>

      <Toaster />
    </React.Fragment>
  );
}
```

Your `page.tsx` should look like this:

```tsx
"use client"

import { Link2Icon } from "@radix-ui/react-icons";
import React, { useState } from "react";
import { toast, Toaster } from "sonner";
import { scrapeCheck, scrapeURL } from "./actions";

// check url scraping
async function checkURL(f: FormData) {
  // get url
  const inputUrl = f.get("url");
  if (!inputUrl) return toast.error("Please enter a URL", { duration: 5000 });

  // scrape check: keep the loading toast's id so the result replaces it
  const id = toast.loading("Checking if scraping is allowed...", { duration: 5000 });
  const res = await scrapeCheck(inputUrl as string);

  // result
  if (res) toast.success("Scraping is allowed", { id, duration: 5000 });
  else toast.error("Scraping is not allowed", { id, duration: 5000 });
}

// scrape URL
async function scrape(f: FormData) {
  // get url
  const inputUrl = f.get("url");
  if (!inputUrl) return toast.error("Please enter a URL", { duration: 5000 });

  // scrape
  const id = toast.loading(`Scraping ${inputUrl} ...`, { duration: 5000 });
  const res = await scrapeURL(inputUrl as string);
  if (!res) return toast.error("Failed to scrape the URL", { id, duration: 5000 });
  toast.dismiss(id); // the download itself signals success

  // download: wrap the content in a Blob and click a temporary link to it
  const blob = new Blob([res], { type: "text/plain" });
  const url = URL.createObjectURL(blob);
  const a = document.createElement("a");
  a.href = url;
  a.download = `scraped-${inputUrl}.html`;
  document.body.appendChild(a);
  a.click();
  a.remove(); // clean up the temporary link
  URL.revokeObjectURL(url);
}

export default function Home() {
  const [url, setUrl] = useState("");

  return (
    <React.Fragment>
      {/* Title */}
      <h1 className="md:text-[60px] text-[30px] font-bold">Scrape Check Demo</h1>

      <div className="flex flex-col w-full items-center justify-center gap-2">
        {/* Main Input */}
        <div className="flex w-11/12 md:w-2/5 items-center space-x-2 p-4 bg-slate-100 rounded-lg">
          <Link2Icon className="h-5 w-5" />
          <input type="text" placeholder="Enter a URL....." className="outline-none bg-transparent w-4/5" onChange={(e) => setUrl(e.target.value)} />
        </div>

        <div className="flex items-center gap-2 px-2 flex-col md:flex-row ">
          {/* Check Button */}
          <form action={checkURL}>
            <input type="text" name="url" hidden readOnly value={url} required />
            <button type="submit" className="w-[250px] p-2 text-sm bg-slate-100 rounded-md ">Can i scrape that?</button>
          </form>

          {/* Scrape Button */}
          <form action={scrape}>
            <input type="text" name="url" hidden readOnly value={url} required />
            <button type="submit" className="w-[250px] p-2 text-sm bg-slate-100 rounded-md ">Scrape URL</button>
          </form>
        </div>
      </div>

      <Toaster />
    </React.Fragment>
  );
}
```

Start the dev server with `npm run dev` and visit http://localhost:3000. You should see the “Scrape Check Demo” title, the URL field and the two buttons.

Reminder: You can also visit scraper-demo.andriotis.me

Now for the last part: Usage

1. Enter a URL in the field
2. Click either “Can i scrape that?” or “Scrape URL”
3. You’ll get the result as a toast, and a successful scrape also downloads the scraped page as a file!
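If you’d rather have one button that checks first and only scrapes when allowed, the two server actions compose naturally. This is a sketch under stated assumptions: `checkThenScrape` and its result type are hypothetical, and the check and scrape functions are passed in (in the app, `scrapeCheck` and `scrapeURL` from `./actions`) so the flow can be tried without calling the API.

```ts
// Sketch only: checkThenScrape and ScrapeOutcome are hypothetical. The two
// functions are injected; in the app you would pass scrapeCheck and scrapeURL.
type CheckFn = (url: string) => Promise<boolean>;
type ScrapeFn = (url: string) => Promise<string | null>;

type ScrapeOutcome =
  | { status: "disallowed" }
  | { status: "failed" }
  | { status: "ok"; content: string };

async function checkThenScrape(url: string, check: CheckFn, scrape: ScrapeFn): Promise<ScrapeOutcome> {
  // Ask first; never spend a scrape request on a disallowed URL.
  if (!(await check(url))) return { status: "disallowed" };
  const content = await scrape(url);
  // Mirrors the `!res` check in scrape(): null or an empty string counts as failure.
  if (!content) return { status: "failed" };
  return { status: "ok", content };
}
```

Keep in mind that `scrapeCheck` also returns `false` when the request itself fails, so a `disallowed` result can mean the check never completed.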

Thanks for reading!